Scaling Generative Pre-training for User Ad Activity Sequences

17th AdKDD (KDD 2023 Workshop)

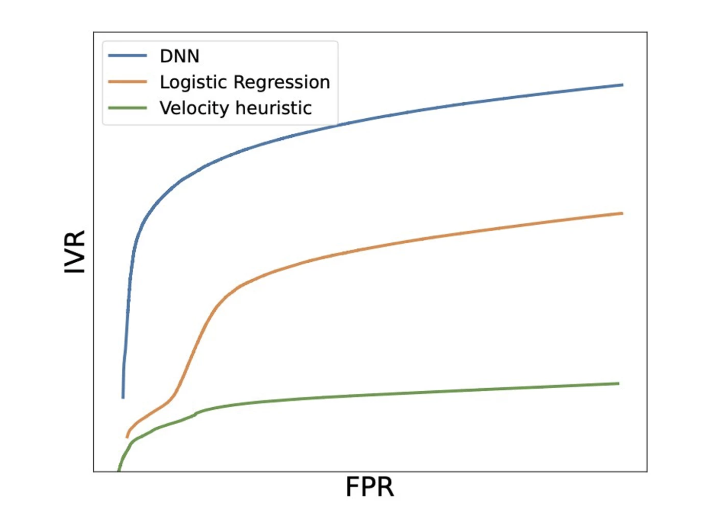

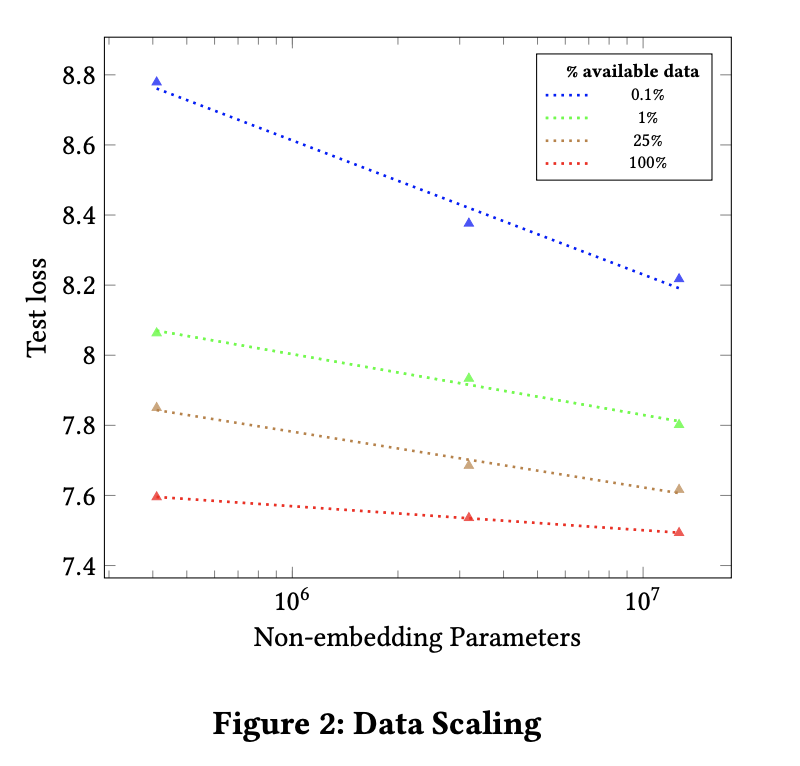

We demonstrate scaling properties with respect to model size, data, and compute on user ad activity sequence data. Larger models achieve better downstream performance on two tasks: response prediction and bot detection.
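The abstract does not say which functional form the scaling trend follows. Scaling studies commonly summarize such trends with a power law, loss ≈ a · N^(−α), where N is model size (or data or compute). Below is a minimal sketch, assuming that form, of how one might estimate α with a least-squares fit in log-log space. The function name `fit_power_law` and the data points are hypothetical. The points are synthetic values generated from a known curve, not results from this work.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss ~= a * size**(-alpha) by ordinary least squares on log-log values.

    Hypothetical helper for illustration; returns (a, alpha).
    """
    xs = [math.log(s) for s in sizes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope of the regression line log(loss) = log(a) - alpha * log(size)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic points drawn from loss = 10 * size**-0.1 (assumed, not measured).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [10 * s ** -0.1 for s in sizes]
a, alpha = fit_power_law(sizes, losses)
print(round(a, 3), round(alpha, 3))  # → 10.0 0.1
```

Fitting in log space turns the power law into a straight line. A larger fitted α would mean the loss falls faster as the model grows. With real measurements the points will scatter around the line rather than sit exactly on it.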